Research Interests

While I am broadly interested in topics related to programming languages (PL) and software engineering (SE), my current focus is on program analysis and its interplay with machine learning (ML) and artificial intelligence (AI). On one hand, I leverage ideas from ML/AI to build better program analyses. On the other hand, I develope program analyses and languages for improving intepretability, fairness, robustness, and safety of ML/AI systems. Most of my projects can be classified into one of these two directions.

Projects in PL/SE → ML/AI

Explaining Neural Network Judgments Using Corrections

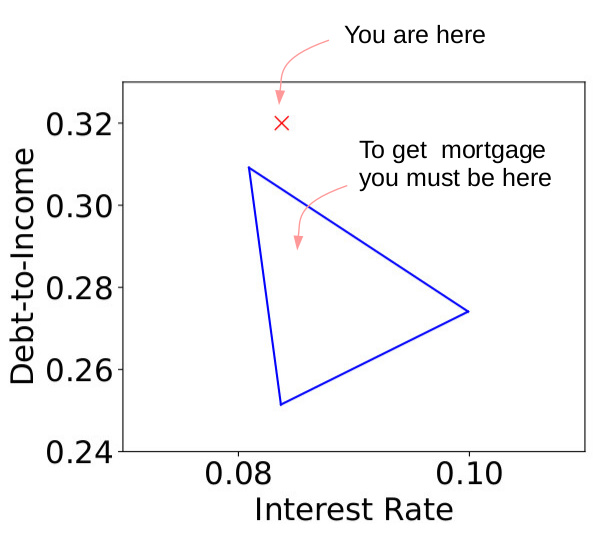

We developed a new program analysis that generates corrections as actionable explanations when a judgment made by a neural network is undesirable to a user. To ensure that it would be easy for the user to incorporate these explanations, our corrections are minimal, stable, and symbolic. For example, if a neural network rejects a user's mortgage application, our approach will generate explanations such as "you will get approved if you increase your credit score by at least 30 and salary by $200". Our algorithm generates such corrections for feedforward networks with ReLU activations by solving a sequence of linear programming problems.

We demonstrate the effectiveness our technique on three networks: one predicting whether an applicant will pay a mortgage, one predicting whether a first-order theorem can be proved efficiently by a solver using certain heuristics, and the final one judging whether a drawing is an accurate rendition of a canonical drawing of a cat.

Paper(s): [ NeurIPS'18 ]

Scalable Verification of Algorithmic Fairness

As machine learning systems are increasingly used to make real world legal and financial decisions, it is of paramount importance that we develop algorithms to verify that these systems do not discriminate against minorities. We design the first scalable algorithm for verifying fairness specifications. Our algorithm obtains strong correctness guarantees based on adaptive concentration inequalities; such inequalities enable our algorithm to adaptively take samples until it has enough data to make a decision.

We implement our algorithm in a tool called VeriFair, and show that it scales to large machine learning models, including a deep recurrent neural network that is more than five orders of magnitude larger than the largest previously-verified neural network.While our technique only gives probabilistic guarantees due to the use of random samples, we show that we can choose the probability of error to be extremely small.

Paper(s): [ OOPSLA'19 ]

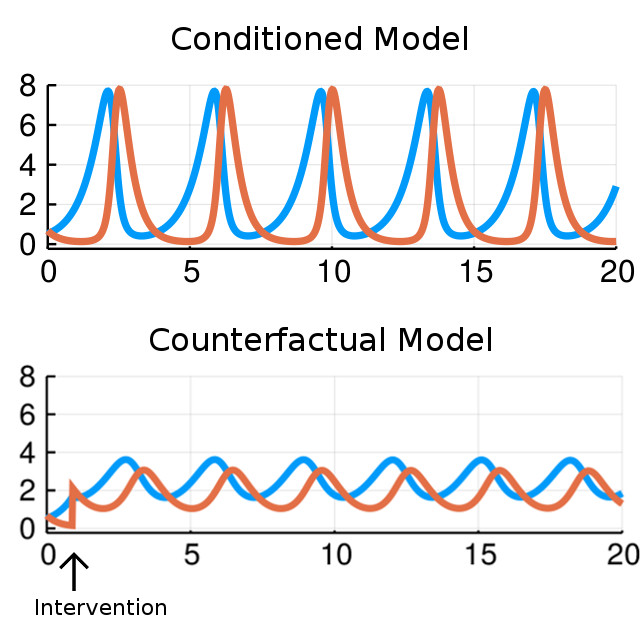

Omega: A Probabilistic Programming Language for Uncertain Distributional Properties and Causality

Probabilistic programming has become an emerging topic in ML and PL due to its abilities to a) succinctly construct arbitrary distributions, and b) condition rich predicates. Omega further improves the expressiveness by adding two new features:

a) Omega allows conditioning on distributional properties, which summarize the whole output distribution rather than a single sample. Example properties include expectations, variances, KL-divergence, and expressions that compose them. This enables many important applications such as inferring machine learning models that are fair or robust. There are two main challenges: 1) conditioning distributional properties is a semantic error as they are not random variables, and 2) there can be multiple ways to change the distributions to satisfy these properties. We introduce a new language construct called Random Conditional Distributions to address them. We demonstrate the effectiveness of our approach by repairing classifiers that are not robust and classifiers that are unfair.b) Omega adds support for interventions and counterfactuals. They are the two basic operators for causal inference which is another emerging trend in ML as existing models are good at capturing correlations but not causality. We implement these two operators by applying lazy evaluation and demonstrate their effectiveness on examples in population dynamics, inverse planning, and causation.

Paper(s): [ Tech Report 1 ] [ Tech Report 2 ]

Certified Control for Self-Driving Cars

Certified control is a new architectural pattern for achieving high assurance of safety in autonomous cars. As with a traditional safety controller or interlock, a separate component oversees safety and intervenes to prevent safety violations. This component (along with sensors and actuators) comprises a trusted base that can ensure safety even if the main controller fails. But in certified control, the interlock does not use the sensors directly to determine when to intervene. Instead, the main controller is given the responsibility of presenting the interlock with a certificate that provides evidence that the proposed next action is safe. The interlock checks this certificate, and intervenes only if the check fails. Because generating such a certificate is usually much harder than checking one, the interlock can be smaller and simpler than the main controller, and thus assuring its correctness is more feasible. More information can be found at https://www.csail.mit.edu/research/interlock-self-driving-cars .

Paper(s): [ DARS'19 ]

Projects in ML/AI → PL/SE

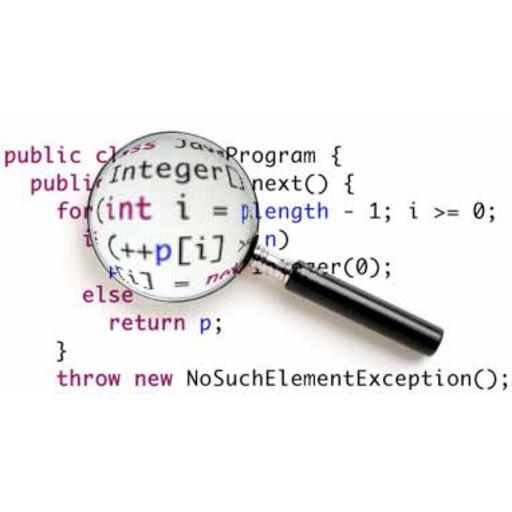

Adaptive Program Analysis by Combining Logical and Probabilistic Reasoning

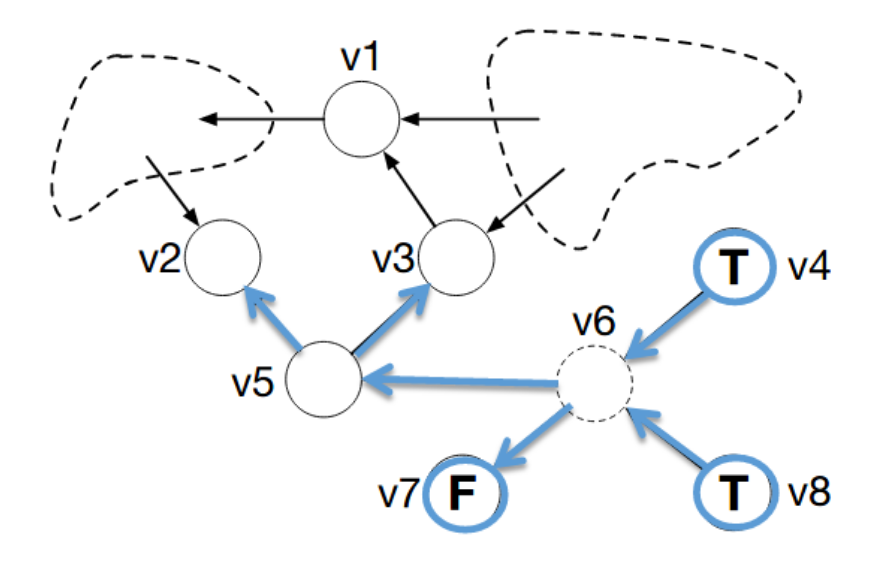

Approximations are necessary evils in program analyses as any nontrivial analysis problem is undecidable. A key challenge is how to choose an approximation that balances various tradeoffs such as precision vs. scalability and false positive rate vs. false negative rate. Our key insight is that it is unviable to find a one-size-fits-all approximation for all usage scenarios, so instead we on the fly synthesize a specialized approximation that is optimal for each individual usage scenario. We propose a unified framework that automatically encodes this problem as a system of mixed logical and probabilistic constraints. While the logical part encodes the space of correct approximations, the probabilistic part encodes uncertainties that come from different usage scenarios.

We implemented several typical adaptivity tasks inside the framework including adapting to individual assertions of interest, adapting to user feedback, and adapting to patterns of procedure reuses within and across programs. We demonstrate the effectiveness of our approach on important analyses such as pointer analysis, type-state analysis, and race detection by improving their precision and scalability significantly.

Paper(s): [ PLDI'13 ] [ PLDI'14a ] [ PLDI'14b ] [ FSE'15 ] [ OOPSLA'16 ] [ OOPSLA'17 ]

Nichrome: A Scalable Solver for Mixed Hard And Soft Constraints

Mixed hard and soft constraints have been increasingly used in program analyses: while the hard constraints encode soundness requirements, the soft constraints encode different optimization objectives. Although there exist solvers and algorithms for such constraints, program analysis applications pose new challenges. On one hand, exact solvers such as Z3 have difficulties scaling to large constraints (upto 10^30 clauses) generated from these applications. On the other hand, fast and approximate solvers such as Tuffy and Alchemy can violate hard constraints which is unacceptable for verification tasks.

To address these challenges, we propose several new solving techniques by leveraging domain insights and implemented them in a solver called Nichrome. These techniques include iterative-lazy solving, query-guided solving, and incremental solving. We showed that our solver not only outperforms existing solvers on constraints generated from program analysis, but also on constraints generated from applications in information retrieval, artificial intelligence, and other domains.